Breaking Physical Hardware Limits with AI-Enabled Ultra-High-Speed Structured-Light 3D Imaging

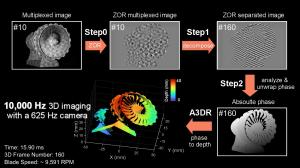

Schematic illustration of the DFAMFPP principle: Multiple dual-frequency fringe patterns are overlaid in a single long-exposure image. A deep neural network then reconstructs time-resolved 3D shapes from the multiplexed spatial spectrum.

Rapid progress in optoelectronic technologies has made 3D imaging and sensing one of the most critical frontiers in optical metrology and information optics.

CHENGDU, SICHUAN, CHINA, August 20, 2025 /EINPresswire.com/ -- Since the era of film photography, capturing and documenting high-speed dynamic processes has been a longstanding pursuit in science and engineering. With the rise of solid-state imaging technologies such as charge-coupled device (CCD) and complementary metal-oxide-semiconductor (CMOS) sensors, high-speed imaging has attracted increasing attention and become a cornerstone of fields such as aerospace, industrial inspection, and national defense.

Conventional imaging sensors primarily record two-dimensional (2D) image sequences that lack depth information. In recent years, rapid progress in optoelectronic technologies has made 3D imaging and sensing one of the most dynamic and critical frontiers in optical metrology and information optics. Among 3D imaging techniques, Fringe Projection Profilometry (FPP) has emerged as a leading non-contact 3D surface measurement technique thanks to its high accuracy, flexible encoding, and wide applicability. Nevertheless, conventional structured-light 3D imaging techniques such as FPP rely on one-to-one synchronization between pattern projection and image acquisition, which fundamentally limits the system’s temporal resolution to the native frame rate of the imaging sensor. Current methods for increasing speed often depend on high-refresh-rate hardware, which significantly increases system complexity and cost. This hardware bottleneck has become a major obstacle to advancing high-speed and ultra-high-speed 3D imaging.

To overcome this limitation, Frequency Division Multiplexing (FDM), a classical information multiplexing technique in communication systems, offers a promising solution. As early as 1997, Professor Takeda introduced FDM into FPP, superimposing two fringe patterns of different frequencies in a single image so that phase demodulation and phase unwrapping could both be performed from one capture. Similarly, in off-axis digital holography, researchers have overlapped holograms captured at different time points within a single exposure, enabling multi-temporal holographic reconstruction from just one multiplexed image.
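To make the multiplexing idea concrete, the following is a minimal Python sketch, not the method from the paper. It sums two cosine fringes with different carrier frequencies along a single image row and recovers each phase by evaluating one discrete Fourier transform bin. The names `make_row` and `demodulate`, the carrier frequencies (8 and 20 cycles per row), and the constant test phases are all illustrative assumptions.

```python
import cmath
import math

N = 256          # pixels in one image row (illustrative)
F1, F2 = 8, 20   # carrier frequencies in cycles per row (assumed values)


def make_row(phi1, phi2):
    """Sum two cosine fringes with different carriers into one intensity row."""
    return [
        2.0
        + math.cos(2 * math.pi * F1 * x / N + phi1)
        + math.cos(2 * math.pi * F2 * x / N + phi2)
        for x in range(N)
    ]


def demodulate(row, freq):
    """Recover the phase of one carrier from its single DFT bin."""
    coeff = sum(
        value * cmath.exp(-2j * math.pi * freq * x / N)
        for x, value in enumerate(row)
    )
    return cmath.phase(coeff)


# Two phases are encoded in one row and read back independently.
row = make_row(0.7, -1.2)
phi1_est = demodulate(row, F1)
phi2_est = demodulate(row, F2)
print(phi1_est, phi2_est)
```

The recovery is clean here only because each phase is constant, so each carrier occupies exactly one spectral bin. On a real surface the phase varies across the image, each carrier spreads into a band, and neighboring bands can overlap.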

Yet this multiplexing comes at a cost. “Think of it like trying to listen to several conversations at once on different radio frequencies—unless you can tune in precisely, everything becomes noise,” explained Prof. Zuo. The resulting frequency domain becomes highly complex, and the filtering and inversion steps in the Fourier domain become ill-conditioned. Signal recovery suffers from spectral overlap, leakage, and crosstalk, leading to significant degradation in reconstruction accuracy, resolution, and robustness—far from meeting the demands of practical applications.

Research Highlights:

In response to these challenges, researchers from Nanjing University of Science and Technology (NJUST) led by Professors Qian Chen and Chao Zuo collaborated with Professors Malgorzata Kujawinska and Maciej Trusiak from Warsaw University of Technology. Together, they have developed a novel deep learning-enabled 3D imaging method: Dual-Frequency Angular-Multiplexed Fringe Projection Profilometry (DFAMFPP). Leveraging the high temporal resolution of digital micromirror devices (DMD), DFAMFPP projects multiple sets of dual-frequency fringe patterns within a single camera exposure period, encoding multiple moments of 3D information into a single multiplexed image.

“Imagine condensing a full video into a single photograph, and then teaching an AI to unfold it frame by frame,” said Prof. Zuo. “That’s exactly what DFAMFPP does—with physics and neural networks working hand in hand.”

A novel two-stage deep neural network—designed with number-theoretical principles—then demultiplexes multiple high-precision absolute phase maps from this single image. As a result, DFAMFPP achieves high-speed, high-precision absolute 3D surface measurements at speeds 16 times faster than the sensor’s native frame rate.
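The release does not detail how number theory enters the network design. As background only, the classical number-theoretical approach to dual-frequency phase unwrapping can be sketched as follows. The sketch assumes coprime fringe counts (9 and 8, chosen purely for illustration) and noise-free wrapped phases; `LUT`, `wrap`, and `unwrap` are hypothetical names, and this is not the authors' neural network.

```python
import math

F1, F2 = 9, 8    # coprime fringe counts across the field (assumed values)
TWO_PI = 2 * math.pi

# The field splits into F1*F2 equal segments; inside segment i the fringe
# orders are k1 = i // F2 and k2 = i // F1. Because F1 and F2 are coprime,
# the integer F1*k2 - F2*k1 identifies the pair (k1, k2) uniquely.
LUT = {
    F1 * (i // F1) - F2 * (i // F2): (i // F2, i // F1)
    for i in range(F1 * F2)
}


def wrap(phase):
    """Wrap a phase into [0, 2*pi)."""
    return phase % TWO_PI


def unwrap(phi1, phi2):
    """Turn two wrapped phases into absolute phases via the lookup table."""
    key = round((F2 * phi1 - F1 * phi2) / TWO_PI)
    k1, k2 = LUT[key]
    return phi1 + TWO_PI * k1, phi2 + TWO_PI * k2


# Example: a point at normalized position t across the field.
t = 0.4321
abs1, abs2 = unwrap(wrap(TWO_PI * F1 * t), wrap(TWO_PI * F2 * t))
print(abs1 / (TWO_PI * F1), abs2 / (TWO_PI * F2))  # both recover t
```

With measurement noise, the rounded key can fall on the wrong table entry. That sensitivity is one reason a learned demultiplexing stage may be attractive.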

The researchers validated their method by dynamically measuring a high-speed turbofan engine prototype rotating at approximately 9600 RPM. Remarkably, using only a standard 625 Hz industrial camera, they achieved 10,000 Hz 3D imaging, capturing fine structural details of the rapidly spinning blades. “Even though the blades were barely visible in the raw image due to motion blur,” noted Prof. Zuo, “our system successfully reconstructed their full 3D shape by unlocking the high-frequency cues hidden in the data.”
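The figures quoted for this experiment can be checked with a few lines of arithmetic. The Python sketch below uses only numbers stated in the release. It treats the 16-fold speed-up as 16 reconstructed 3D frames per camera frame, and it measures rotation over a full camera frame period rather than the actual exposure time, which the release does not give. All variable names are illustrative.

```python
CAMERA_HZ = 625    # camera frame rate stated in the release
MULTIPLEX = 16     # 3D frames per camera frame, from the stated 16x speed-up
ROTOR_RPM = 9600   # approximate rotor speed stated in the release

imaging_hz = CAMERA_HZ * MULTIPLEX    # 3D imaging rate: 10000
rev_per_s = ROTOR_RPM / 60            # rotor speed: 160.0 revolutions per second

# Blade rotation between consecutive reconstructed 3D frames (about 5.76 degrees).
deg_per_3d_frame = 360 * rev_per_s / imaging_hz

# Blade rotation over one full camera frame period (about 92.16 degrees).
deg_per_camera_frame = 360 * rev_per_s / CAMERA_HZ

print(imaging_hz, rev_per_s, deg_per_3d_frame, deg_per_camera_frame)
```

A rotor that turns roughly a quarter revolution per camera frame is consistent with the blades being barely visible in the raw image, while about 6 degrees per reconstructed frame leaves the blade motion resolvable.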

By combining deep learning and computational imaging, DFAMFPP utilizes frequency-domain multiplexing to reconstruct multiple 3D frames from a single exposure—without sacrificing spatial resolution. This breakthrough overcomes the longstanding frame-rate limitation of imaging sensors, opening new possibilities for studying high-speed dynamic processes using low-frame-rate devices. In the future, DFAMFPP may be integrated with streak imaging, compressed ultrafast photography, and other advanced methods to achieve 3D imaging at rates exceeding one million frames per second. This would enable unprecedented visualization of ultra-fast phenomena such as shockwave propagation and laser–plasma interactions, offering transformative tools for frontier scientific exploration.

This work, titled “Dual-Frequency Angular-Multiplexed Fringe Projection Profilometry with Deep Learning: Breaking Hardware Limits for Ultra-High-Speed 3D Imaging”, has been published in the 2025 (8) issue of Opto-Electronic Advances.

About the researchers:

The Smart Computational Imaging Laboratory (SCILab: www.scilaboratory.com) is affiliated with the “Spectral Imaging and Information Processing” Innovation Team of the Ministry of Education’s Changjiang Scholars Program and the first batch of “National Huang Danian-style Teacher Teams”, led by Professor Qian Chen, the leader of the national first-level key discipline of optical engineering at Nanjing University of Science and Technology. Professor Chao Zuo, the academic leader of the laboratory, is a distinguished professor of the Ministry of Education’s Changjiang Scholars Program, a Fellow of SPIE, Optica, and IOP, and has been selected as a Clarivate Analytics Highly Cited Scientist.

The laboratory is committed to developing a new generation of computational imaging and sensing technologies. Driven by major national needs and supported by key projects, it conducts engineering practice to explore the mechanisms of new optical imaging, develops advanced instruments, and explores their cutting-edge applications in biomedicine, intelligent manufacturing, national defense and security, and other fields. Its research results have been published in more than 270 papers in SCI-indexed journals, of which 46 were selected as cover papers in journals such as Light, OEA, and Optica, and 25 were selected as ESI highly cited or hot papers; the papers have been cited nearly 20,000 times. Professor Zuo has won the Fresnel Prize of the European Physical Society, the first prize of the Technological Invention Award of the Chinese Society of Optical Engineering, the first prize of the Basic Category of the Jiangsu Science and Technology Award, and the “Special Commendation Gold Award” of the Geneva International Invention Exhibition.

In terms of talent training, 6 doctoral students in the laboratory have won the National Optical Engineering Outstanding Doctoral Dissertation/Nomination Award, 3 have won the SPIE Optics and Photonics Education Scholarship, and 5 have won the Wang Daheng Optics Award of the Chinese Optical Society. The student team has won 15 gold or special prizes in national competitions including the “Challenge Cup”, “Internet+”, “Chuang Qingchun”, and the “China Graduate Electronics Design Contest”, as well as the national championship of “Internet+” in 2023.

Read the full article here: https://www.oejournal.org/oea/article/doi/10.29026/oea.2025.250021

Andrew Smith

Charlesworth

+44 7753 374162

marketing@charlesworth-group.com

Legal Disclaimer:

EIN Presswire provides this news content "as is" without warranty of any kind. We do not accept any responsibility or liability for the accuracy, content, images, videos, licenses, completeness, legality, or reliability of the information contained in this article. If you have any complaints or copyright issues related to this article, kindly contact the author above.